Performance

🧚 Guide on the meanings and logic of Performance Indicators from the dimensions of Task, Data, Objects and Worker.

Under the Performance tab of a single Task page, you can view performance statistics along the Task and Worker dimensions, including Data Performance, Object Performance, and Accuracy. Statistics can also be filtered and displayed by Data Completed/Submitted, Data Validity, Data Stage, and Submitted/Completed Time.

Personal Performance & My or Team Tasks Performance

For a worker, clicking the View Performance tab in the top right corner of the To-do page opens the Personal Performance page, which displays performance statistics from the worker's own perspective.

For a Task Admin or Team Admin, clicking the View Performance tab in the top right corner of the My/Team Tasks Management page opens the My/Team Tasks Performance page, which displays performance statistics from the perspective of a Task Admin or Team Admin.

The display and logic of these performance pages are similar to what is explained below.

Term Explanation

First, let's look at the meanings of the general Performance Indicators on BasicAI:

| Performance Indicator | Meaning |
|---|---|
| Task Performance | Performance statistics summarized at the Task level. |
| Worker Performance | Performance statistics summarized at the Worker level. |
| Completed Performance | Completed Performance statistics are calculated after data are accepted in the Acceptance stage. Project settlement should be based on Completed Performance. The time filter for Completed Performance is the time when data are accepted. |
| Submitted Performance | Submitted Performance statistics are calculated from the time data are submitted in the first Annotation stage until they are accepted, and serve only as a reference for project progress. The time filter for Submitted Performance is the time when data are submitted at a given stage. |
| Data Performance | Performance at the data level, such as the number of submitted/completed data items, valid work durations, pass rate, and redo counts. |
| Object Performance | Performance at the result level, such as the number of added, deleted, and modified results, which can be viewed by object type (3D boxes, 2D boxes, rectangles, polygons, polylines, etc.) or by class (cars, trucks, pedestrians, etc.). |
| Accuracy | **By Data**: Suppose a user sets the data accuracy baseline to 95%. If a data item contains 100 bounding boxes and has fewer than 5 errors/comments, the data item is considered accurate. Data Accuracy = accurate data count / total submitted data count. **By Object**: If an object (for example, a box) is not marked with an error comment, it is considered accurate. Object Accuracy = 1 - (count of objects with error comments / total submitted object count). **LiDAR segmentation**: LiDAR Point Accuracy = 1 - (number of wrong points / total number of annotated points). |
| Data Validity | Validity of data, labeled on the tool page as Valid, Unknown, or Invalid. Unknown is treated as Valid when calculating Data Validity. |
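The accuracy formulas in the table can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not BasicAI's implementation: the function names and the `(object_count, error_count)` input shape are hypothetical, and the strict "fewer than" threshold follows the wording of the 95% example above. How the platform treats the exact boundary, or data items with no objects, is an assumption here.

```python
def is_data_accurate(object_count: int, error_count: int,
                     baseline_percent: int = 95) -> bool:
    """Return True if a data item meets the accuracy baseline.

    Assumption: an item is accurate when its error count is strictly
    below the allowed share, e.g. fewer than 5 errors for 100 objects
    at a 95% baseline.
    """
    if object_count == 0:
        # Assumption: an item without objects is accurate only if it
        # has no error comments either.
        return error_count == 0
    allowed_errors = object_count * (100 - baseline_percent) / 100
    return error_count < allowed_errors


def data_accuracy(items: list[tuple[int, int]],
                  baseline_percent: int = 95) -> float:
    """Data Accuracy = accurate data count / total submitted data count."""
    accurate = sum(
        1 for objects, errors in items
        if is_data_accurate(objects, errors, baseline_percent)
    )
    return accurate / len(items)


def object_accuracy(objects_with_errors: int, objects_submitted: int) -> float:
    """Object Accuracy = 1 - objects with error comments / objects submitted."""
    return 1 - objects_with_errors / objects_submitted


def lidar_point_accuracy(wrong_points: int, annotated_points: int) -> float:
    """LiDAR Point Accuracy = 1 - wrong points / annotated points."""
    return 1 - wrong_points / annotated_points
```

For example, `is_data_accurate(100, 4)` is true while `is_data_accurate(100, 5)` is false under the strict threshold assumed here.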

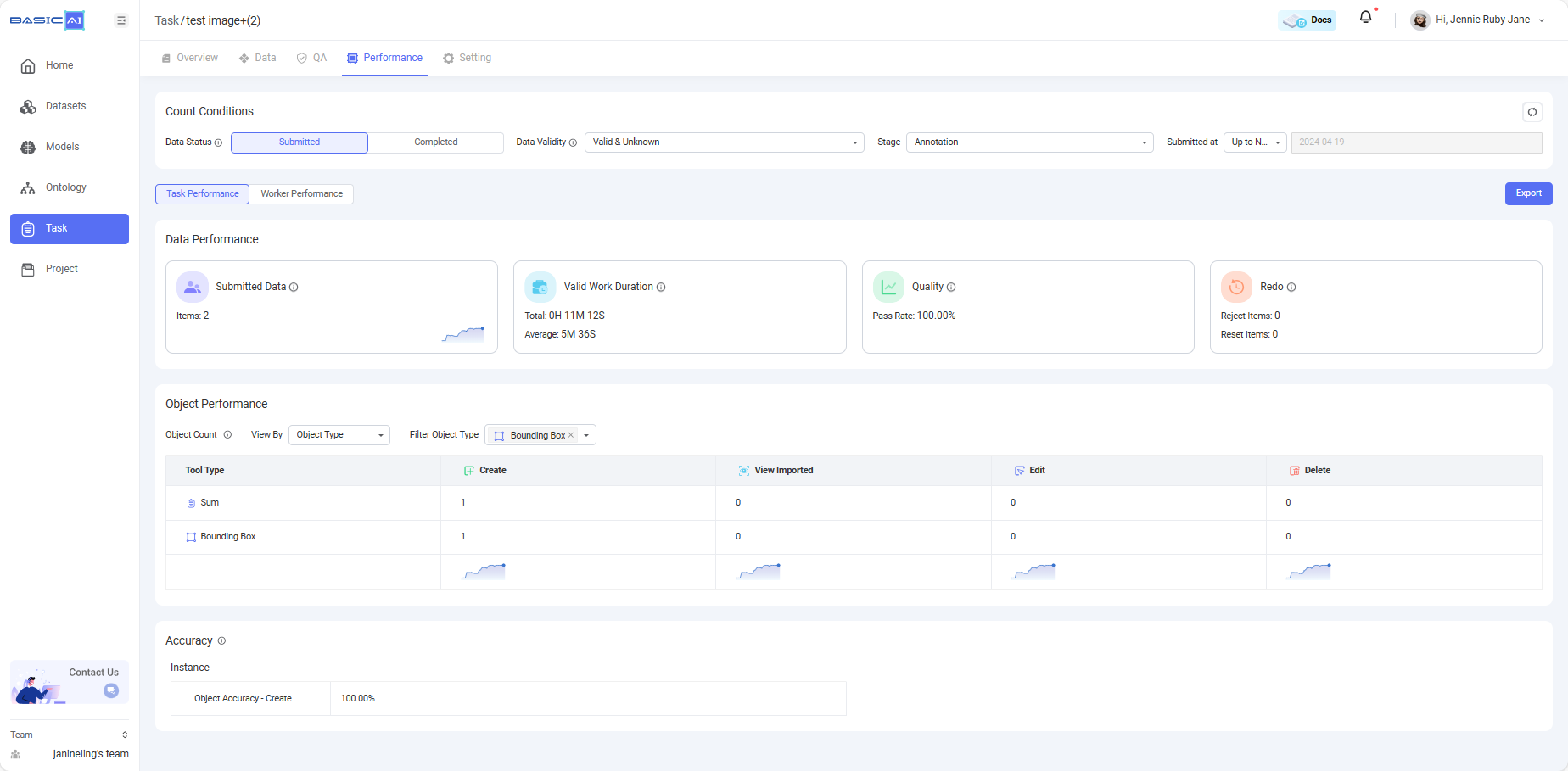

Task Performance

Under the Task Performance tab, both data-level and object-level performance information is available. Data Performance statistics include submitted data item counts, valid work durations, pass rate (as a quality measure), and redo counts. The Object Performance chart shows the counts of actions for each tool type and its corresponding data type. The Submitted tab shows performance statistics for data in the annotation, review, or acceptance stage, while the Completed tab shows performance statistics for completed data that have gone through all stages.
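The difference between the two tabs comes down to which timestamp the time filter uses: the acceptance time for Completed, and the submission time at a given stage for Submitted. The sketch below illustrates that selection logic on a hypothetical in-memory record; the `DataItem` class and its fields are invented for the example and do not reflect the platform's data model.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class DataItem:
    # Hypothetical record: one data item and its workflow timestamps.
    name: str
    # (stage, submitted_at) pairs, e.g. ("Annotation", datetime(...)).
    submissions: list[tuple[str, datetime]] = field(default_factory=list)
    # Set only once the item is accepted in the Acceptance stage.
    accepted_at: Optional[datetime] = None


def completed_in(items: list[DataItem], start: datetime,
                 end: datetime) -> list[DataItem]:
    """Completed: filter on the time the data item was accepted."""
    return [
        item for item in items
        if item.accepted_at is not None and start <= item.accepted_at <= end
    ]


def submitted_in(items: list[DataItem], stage: str, start: datetime,
                 end: datetime) -> list[DataItem]:
    """Submitted: filter on the time of submission at the given stage."""
    return [
        item for item in items
        if any(s == stage and start <= t <= end for s, t in item.submissions)
    ]
```

An item submitted in the annotation stage in one period and accepted in a later one would therefore count toward Submitted in the first period and toward Completed in the second.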

1. Data Performance

| Indicator | Description |
|---|---|
| Submitted/Completed Data | Number of data items that were submitted/completed during the filtered time period. |
| Valid Work Duration | **Total**: The cumulative work duration. **Average**: Total Work Duration / Number of Data Items. This represents the average work duration per item. |
| Quality | **Pass Rate**: 1 - Total Number of Redo Times in the Stage / Total Number of Submission Times. **ACC Pass Rate**: Total Number of Data in the ACC stage / Total Number of Submission Times. |
| Redo | **Rejected Items**: Number of items that were rejected or reassigned. **Reset Items**: Number of items that were rejected or reassigned and had their results cleared to an earlier stage. |
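As a quick worked illustration of the formulas in this table, the following Python sketch computes the average work duration and the two pass rates from raw counts. The function and parameter names are hypothetical, and the formulas are transcribed directly from the table rather than taken from the platform's code.

```python
def average_work_duration(total_work_seconds: float, item_count: int) -> float:
    """Average = Total Work Duration / Number of Data Items."""
    return total_work_seconds / item_count


def pass_rate(redo_times: int, submission_times: int) -> float:
    """Pass Rate = 1 - redo times in the stage / submission times."""
    return 1 - redo_times / submission_times


def acc_pass_rate(data_in_acc_stage: int, submission_times: int) -> float:
    """ACC Pass Rate = data in the ACC stage / submission times."""
    return data_in_acc_stage / submission_times
```

For instance, one hour of total work (3600 seconds) spread over 12 items gives an average of 300 seconds per item, and 5 redos out of 50 submissions gives a pass rate of about 90%.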

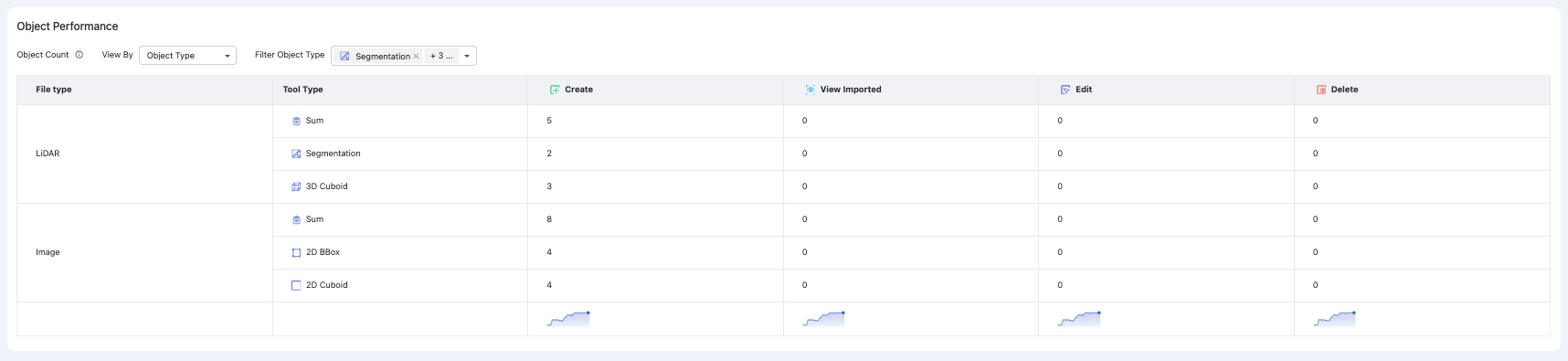

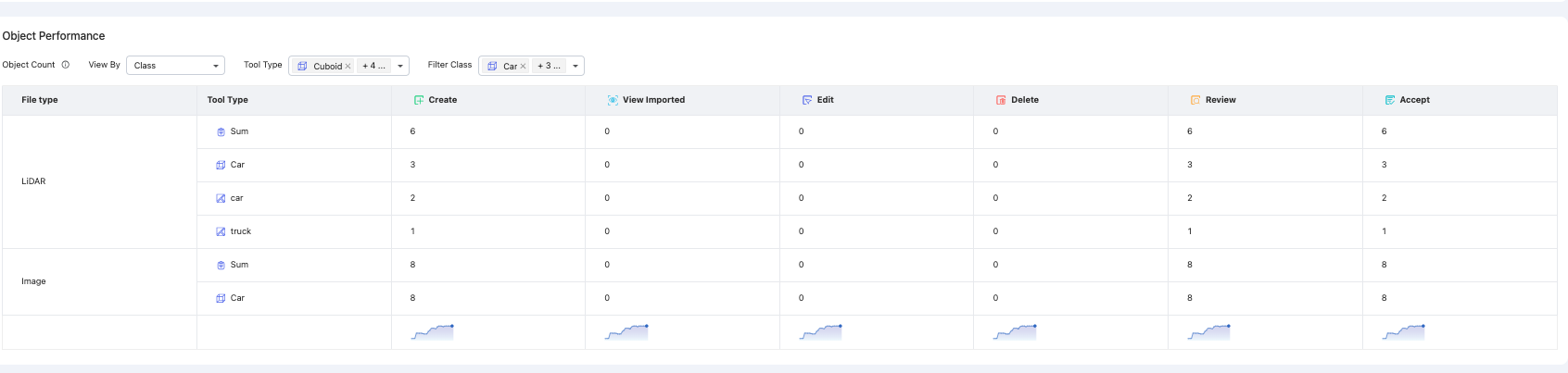

2. Object Performance

The object performance for each stage can be broken down into the following dimensions, and can be viewed according to different object types or classes.

| Stage | Dimension | Description |
|---|---|---|
| Annotation | Create | Number of new objects created by an annotator |
| Annotation | View Imported | Number of pre-annotated objects |
| Review | Review | Number of objects reviewed and passed by a reviewer |
| Review | Create | Number of new objects created by a reviewer in the modification mode |
| Review | Edit | Number of objects edited by a reviewer in the modification mode |
| Review | Delete | Number of objects deleted by a reviewer |
| Acceptance | Accept | Number of objects accepted by an inspector |
| Acceptance | Create | Number of new objects created by an inspector in the modification mode |
| Acceptance | Edit | Number of objects edited by an inspector in the modification mode |
| Acceptance | Delete | Number of objects deleted by an inspector |
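To make the breakdown concrete, here is a minimal Python sketch that tallies object actions by stage and dimension, and then by class within one stage/dimension pair. The `(stage, dimension, class)` tuples and the sample data are hypothetical stand-ins for an action log, which the platform does not necessarily expose in this form.

```python
from collections import Counter

# Hypothetical action log: (stage, dimension, object class).
sample_actions = [
    ("Annotation", "Create", "car"),
    ("Annotation", "Create", "car"),
    ("Annotation", "Create", "pedestrian"),
    ("Review", "Edit", "car"),
    ("Review", "Delete", "pedestrian"),
    ("Acceptance", "Accept", "car"),
]


def count_by_stage_and_dimension(actions):
    """Count actions for each (stage, dimension) pair."""
    return Counter((stage, dimension) for stage, dimension, _ in actions)


def count_by_class(actions, stage, dimension):
    """Count actions per object class within one stage/dimension pair."""
    return Counter(
        cls for s, d, cls in actions
        if s == stage and d == dimension
    )
```

The same grouping would work for object types (3D boxes, polygons, and so on) by swapping the third tuple element.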

3. Accuracy

For data submitted in the annotation or review stages before ACC, Accuracy under Task Performance displays only Object Accuracy. For accepted and completed data, Accuracy under Task Performance displays both Data Accuracy and Object Accuracy. The meaning and calculation logic of Data Accuracy and Object Accuracy can be found in the Term Explanation section above.

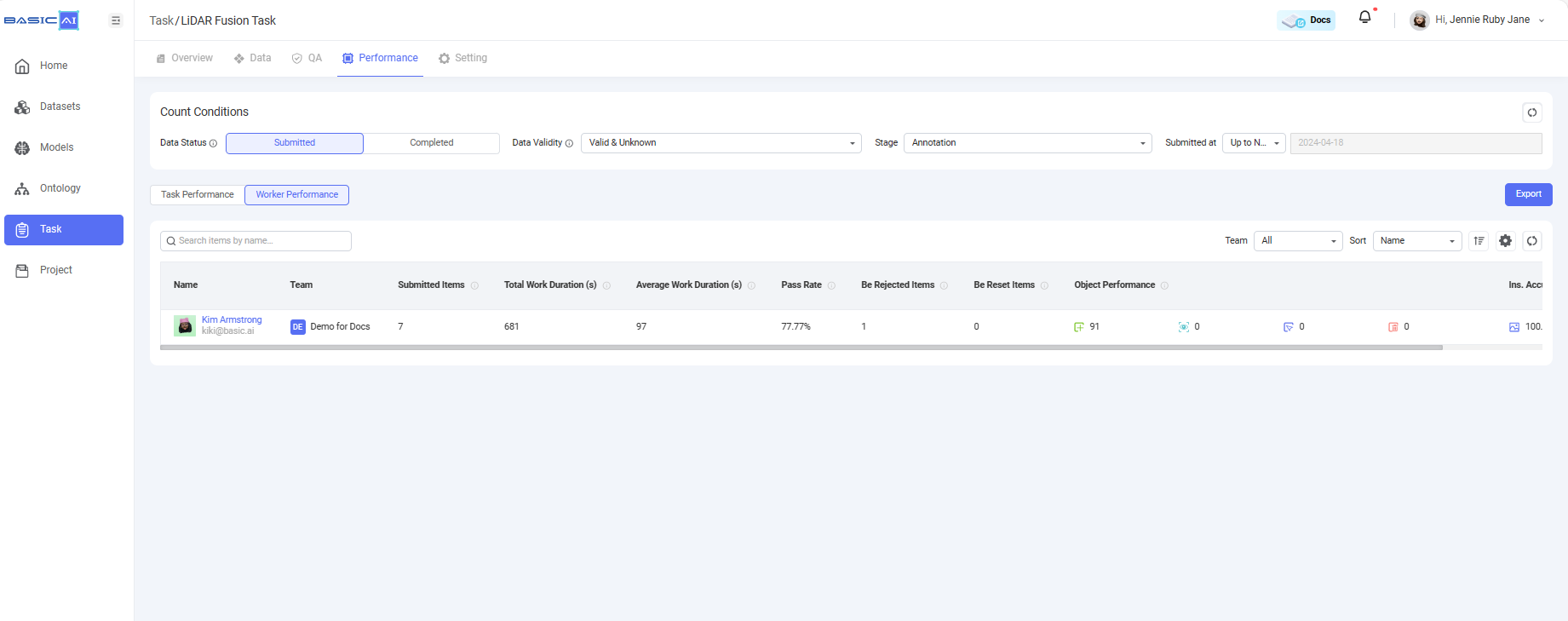

Worker Performance

The Worker Performance section displays performance statistics for each individual worker in the task who has submitted data, including submitted/completed item counts, total and average work durations, pass rate, rejected item counts, reset item counts, object performance, and object accuracy. These concepts have the same meanings as in Task Performance, explained above.
