LiDAR Annotation Tool

🌌 Guide to annotating LiDAR Fusion data.

This guide covers BasicAI's LiDAR annotation tool, which labels fused data from 3D LiDAR point clouds and 2D images and supports object detection, tracking, segmentation, and related tasks.

Prerequisites

Before you start annotating, you (or your project manager) must upload a dataset and configure the ontology.

📖 Quick steps

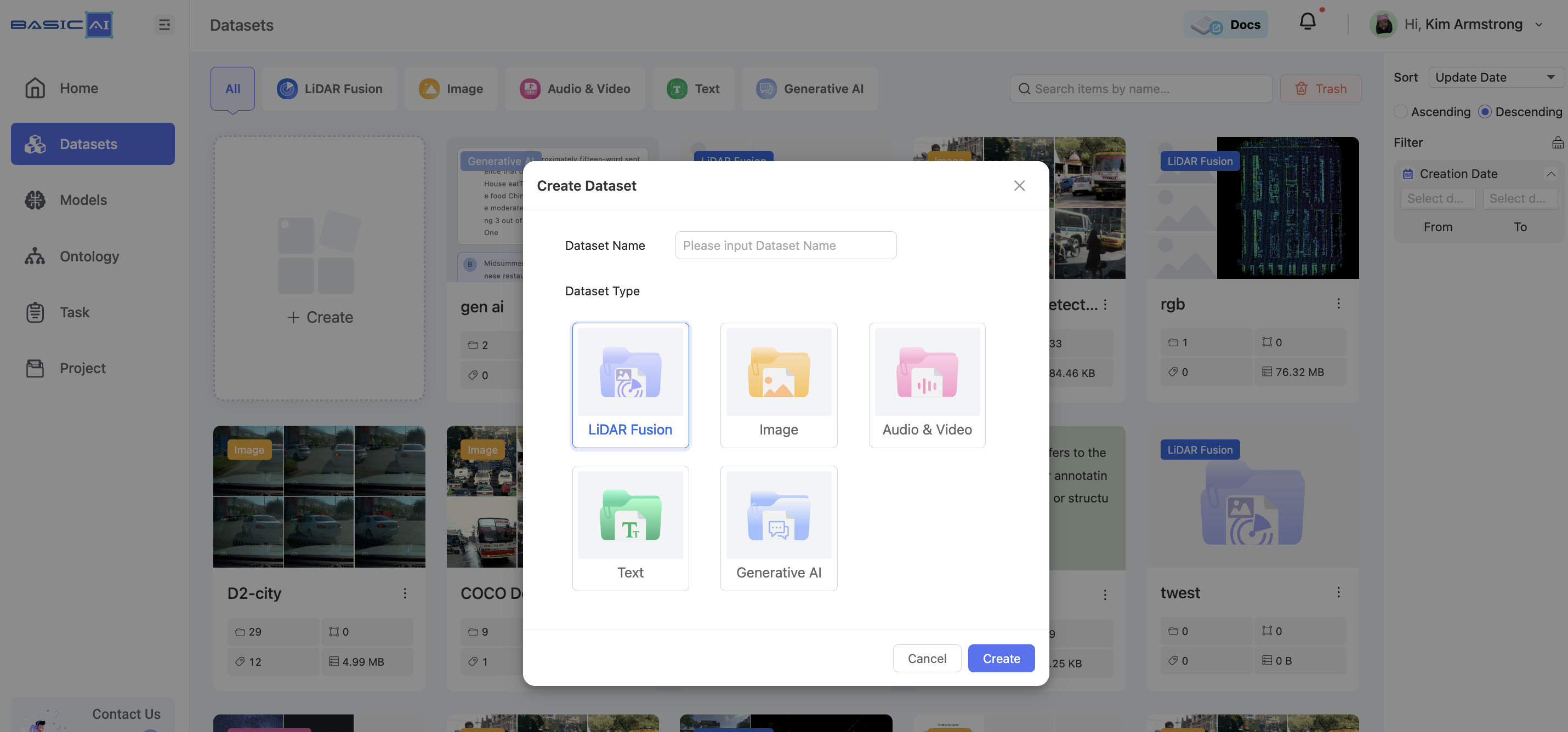

1️⃣ Create a Dataset or Task of type LiDAR Fusion and upload data.

At present, BasicAI only supports the upload of LiDAR Fusion data in compressed formats (.zip / .gzip / .tar / .rar).

For further details on supported 3D formats and upload configurations, please navigate to Upload Data.
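Because uploads must be compressed archives, it can help to check a file's extension and package a local folder before uploading. The sketch below uses only Python's standard library. The helper names `is_supported_archive` and `pack_folder` and the folder name `scene_01` are hypothetical, and the folder layout BasicAI expects inside the archive is described in Upload Data, not here.

```python
import shutil
import tempfile
from pathlib import Path

# Extensions listed in this guide as accepted for LiDAR Fusion uploads.
SUPPORTED_EXTENSIONS = {".zip", ".gzip", ".tar", ".rar"}


def is_supported_archive(path: str) -> bool:
    """Return True if the file name ends with one of the listed extensions."""
    return Path(path).suffix.lower() in SUPPORTED_EXTENSIONS


def pack_folder(folder: str, output_stem: str) -> str:
    """Zip the contents of `folder` and return the path of the created archive."""
    # shutil.make_archive appends the ".zip" suffix to output_stem itself.
    return shutil.make_archive(output_stem, "zip", root_dir=folder)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        scene = Path(tmp) / "scene_01"  # hypothetical folder name
        scene.mkdir()
        (scene / "placeholder.txt").write_text("demo")
        archive = pack_folder(str(scene), str(Path(tmp) / "scene_01_upload"))
        print(archive, is_supported_archive(archive))
```

The extension check only looks at the file name; it does not verify that the archive contents match the required structure.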

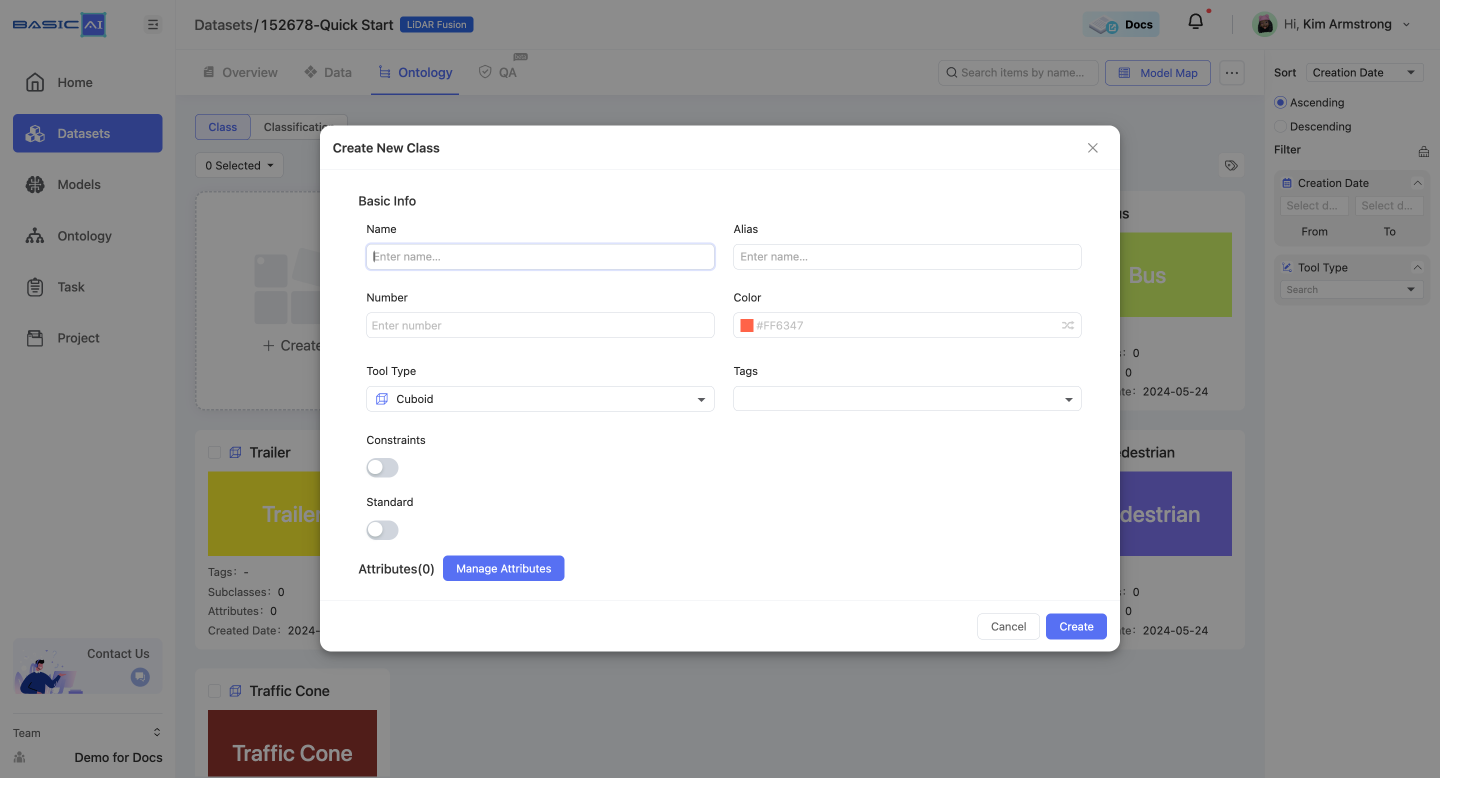

2️⃣ Configure the Ontology based on task requirements.

After creating the dataset and uploading data, click the Ontology tab to configure its classes, attributes, and classifications. For further details, please click Ontology. Explanations of the available tool types can be found in the Ontology Tool Type guide.

Interface

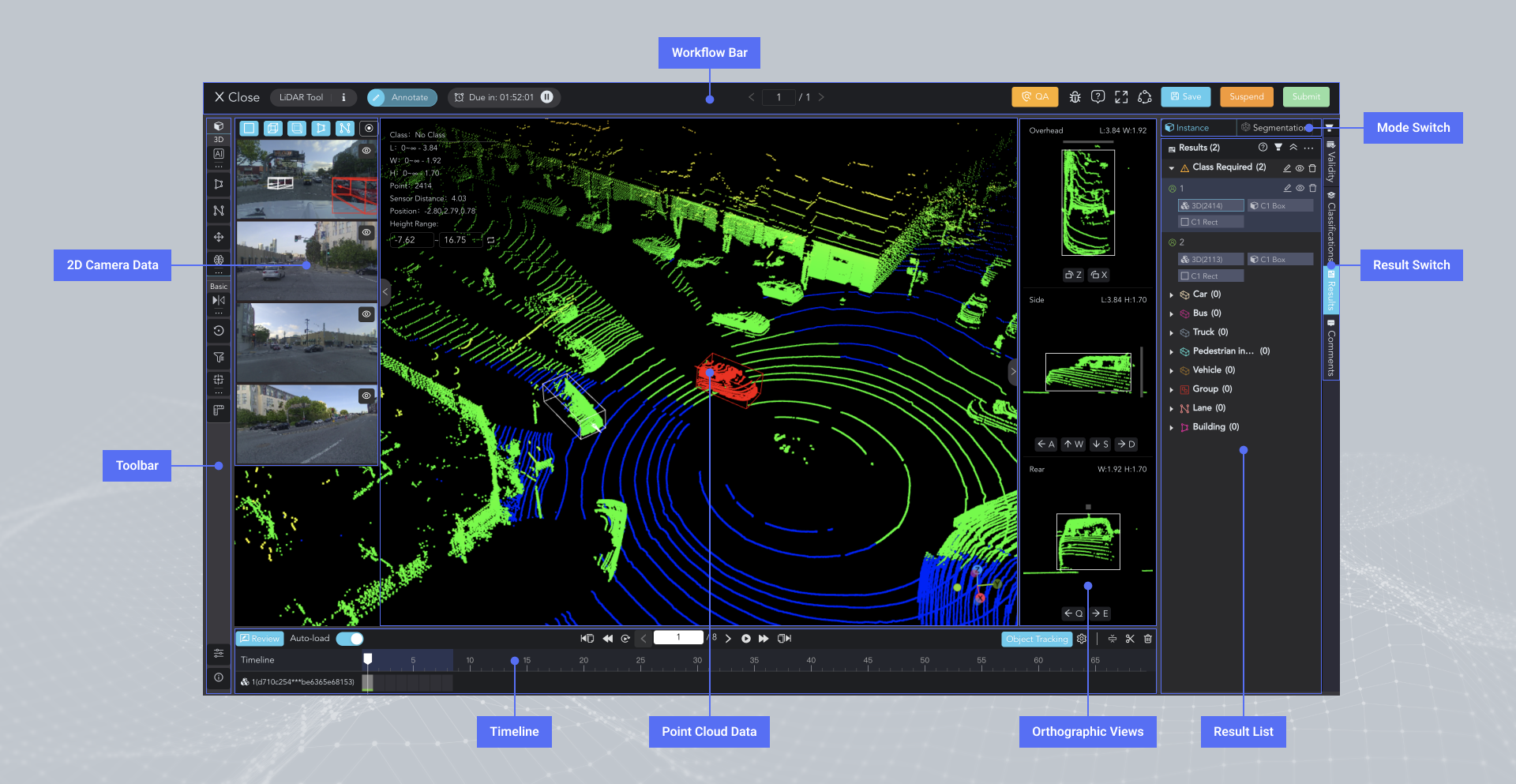

The LiDAR Fusion Tool interface consists of various sections:

- Workflow Bar: For viewing basic data info and performing task-related operations. See more details.

- Point Cloud Data: Point cloud data from LiDAR sensors; serves as a 3D canvas for annotating objects, segmenting points, and displaying results.

- Orthographic Views: Display the selected result in three different views, where it can be fine-tuned to fit the target object more closely.

- 2D Camera Data: Images from camera sensors; serves as a 2D canvas for annotating objects, segmenting, and projecting 3D annotation results.

- Toolbar: Providing various annotation tools and result display settings.

- Timeline: For managing frames in the scene and object tracking.

- Mode Switch: For toggling between Instance and Segmentation annotation modes.

- Result Switch: For switching between Results, Classifications, Validity, and Comments lists. See more details.

- Result List: Displaying annotation results for efficient management.

NOTICE: For each task, these areas may vary based on the Annotation Type (Instance/Segmentation) and Scenario Type (Single Data/Scene/4D BEV) designated by the Project Manager.

We will explore them further in the detailed pages.
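The 2D Camera Data area shows 3D annotation results projected onto the camera images. As a rough illustration of what such a projection involves, the sketch below applies a standard pinhole camera model to a single 3D point. It is not BasicAI's implementation: the function name `project_point` and the calibration values are made up for the example, and the identity extrinsic is chosen only to keep the arithmetic easy to follow.

```python
from typing import List, Optional, Tuple

Matrix = List[List[float]]


def project_point(
    point_lidar: Tuple[float, float, float],
    extrinsic: Matrix,  # 3x4 [R|t], maps the LiDAR frame to the camera frame
    intrinsic: Matrix,  # 3x3 camera matrix K
) -> Optional[Tuple[float, float]]:
    """Project a LiDAR-frame point to pixel coordinates, or None if it is behind the camera."""
    x, y, z = point_lidar
    # Transform into the camera frame: p_cam = R * p + t.
    cam = [row[0] * x + row[1] * y + row[2] * z + row[3] for row in extrinsic]
    if cam[2] <= 0:
        return None  # the point lies behind the image plane
    # Apply the intrinsics, then divide by the homogeneous coordinate.
    uvw = [sum(k * c for k, c in zip(row, cam)) for row in intrinsic]
    return (uvw[0] / uvw[2], uvw[1] / uvw[2])


if __name__ == "__main__":
    # Hypothetical calibration: camera frame equals the input frame (z forward).
    extrinsic = [[1.0, 0.0, 0.0, 0.0],
                 [0.0, 1.0, 0.0, 0.0],
                 [0.0, 0.0, 1.0, 0.0]]
    intrinsic = [[1000.0, 0.0, 640.0],
                 [0.0, 1000.0, 360.0],
                 [0.0, 0.0, 1.0]]
    print(project_point((1.0, 0.5, 10.0), extrinsic, intrinsic))  # (740.0, 410.0)
```

Projecting a 3D box amounts to applying the same function to each of its eight corners and drawing the resulting edges on the image.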

Canvas Transforms

- Rotation: 🖱️Left click and hold, then drag to rotate the view. Click the transform gizmo to quickly rotate the view to a specific direction.

- Translation: 🖱️Right click and hold to drag the canvas.

- Scaling: Scroll the mouse wheel up/down to zoom in/out.

- Relocation: Press the hotkey Y to reset the view, centered on the canvas origin or on the selected object.

- Selection: 🖱️Left click on results to select them.

- Copy: Press Ctrl/⌘+C to copy a selected object and Ctrl/⌘+V to paste it. You can also copy an object by holding Ctrl/⌘ and dragging it.
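The view-related transforms above can be pictured as updates to a small camera state. The sketch below is a toy model, not the tool's actual code: the class `OrbitView`, its fields, the zoom factor, and the choice to fall back to the origin when nothing is selected are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class OrbitView:
    """Toy view state: a camera orbiting a target point."""
    target: List[float] = field(default_factory=lambda: [0.0, 0.0, 0.0])
    azimuth: float = 0.0     # radians around the vertical axis
    elevation: float = 0.5   # radians above the ground plane
    distance: float = 50.0   # camera-to-target distance

    def rotate(self, d_azimuth: float, d_elevation: float) -> None:
        """Left-drag: orbit around the target, keeping elevation short of the poles."""
        self.azimuth = (self.azimuth + d_azimuth) % (2 * math.pi)
        limit = math.pi / 2 - 1e-3
        self.elevation = max(-limit, min(limit, self.elevation + d_elevation))

    def translate(self, dx: float, dy: float) -> None:
        """Right-drag: shift the target within the ground plane."""
        self.target[0] += dx
        self.target[1] += dy

    def zoom(self, wheel_steps: int, factor: float = 0.9) -> None:
        """Wheel: positive steps (scrolling up) move the camera closer."""
        self.distance *= factor ** wheel_steps

    def recenter(self, selected_center: Optional[Tuple[float, float, float]] = None) -> None:
        """Hotkey Y: center on the selected object, else on the origin (assumed fallback)."""
        if selected_center is not None:
            self.target = list(selected_center)
        else:
            self.target = [0.0, 0.0, 0.0]


if __name__ == "__main__":
    view = OrbitView()
    view.rotate(math.pi / 4, 0.1)
    view.translate(2.0, 3.0)
    view.zoom(1)
    view.recenter((10.0, 5.0, 0.0))
    print(view)
```

Keeping the target, angles, and distance as separate fields means each mouse or keyboard action changes only the part of the state it is responsible for.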